This article is part of a series: Output Checking

Our previous post in this series discussed statistical disclosure control (SDC) and how it is used by OpenSAFELY to ensure ‘Safe Outputs’. Here we outline the OpenSAFELY output checking service: who is involved, how our output checkers are trained and how the output checking workflow operates.

Who is the OpenSAFELY output checking team?

All of the OpenSAFELY output checking team are researchers and users of the OpenSAFELY platform. The core team is made up of a dozen trained output checkers from the Bennett Institute for Applied Data Science, but researchers from the London School of Hygiene and Tropical Medicine and the University of Bristol have also been trained in output checking with OpenSAFELY.

How are the output checking team trained?

All users of the OpenSAFELY platform are required to be accredited researchers, a status gained through Safe Researcher Training (SRT) provided by the ONS. This course is intended for researchers applying for access to the ONS Secure Research Service and the UK Data Service Secure Lab, but is also suitable for researchers using other Trusted Research Environments (TREs) such as OpenSAFELY. It covers data security and personal responsibility, as well as the Five Safes model (see the first blog in this series), including basic statistical disclosure control (SDC) (see the second blog in this series). However, this training is not domain-specific and is limited to simple examples. In practice, in domains such as health data research, analysis approaches and their outputs are more complex. Extra training is required to fully understand the disclosure risk of these outputs, as well as the logistical side of output checking, such as managing researcher relationships and responding to requests.

Until recently, these skills were learned ‘on the job’, with reference to existing guidelines, but as the number of TREs has increased, so has the demand for dedicated training of researchers to check research outputs for disclosure concerns. In 2019, a team from the University of the West of England (UWE), led by Prof. Felix Ritchie and specialising in safe data access and SDC, was commissioned by the Office for National Statistics to do just this. The new course is an interactive session that covers both the statistical aspects (i.e. what is the risk of this type of output?) and the operational aspects (i.e. how should I respond if someone is unhappy that an output has been rejected?) of output checking. In collaboration with the team from UWE, we have introduced some OpenSAFELY-specific example outputs to this course, which more than 40 OpenSAFELY researchers have now attended.

The course is followed by a formal assessment, which consists of a set of output review requests and an essay-style question. We require all output checkers to pass this assessment before doing any output checking for the OpenSAFELY platform.

Output checking workflow

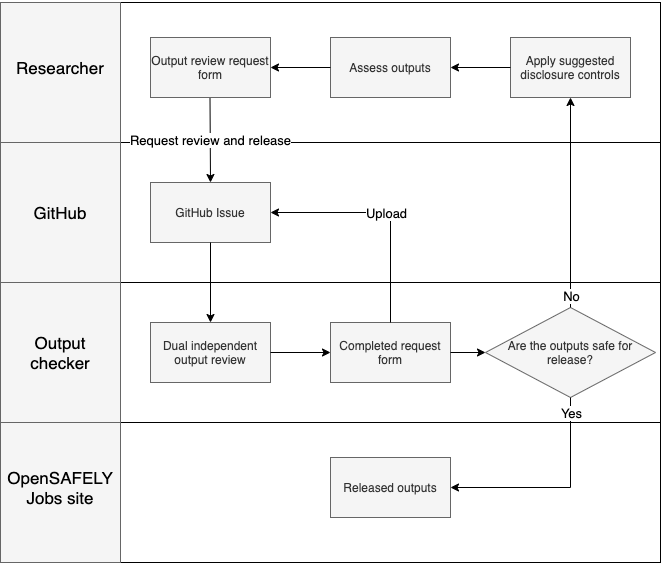

An end-end summary of the OpenSAFELY output checking service is shown below.

1. Researchers review their study outputs and request a release

The output checking service starts with the researcher! Researchers are able to view their analysis outputs from within the OpenSAFELY level 4 server. From here, they can review and apply disclosure controls to their outputs.

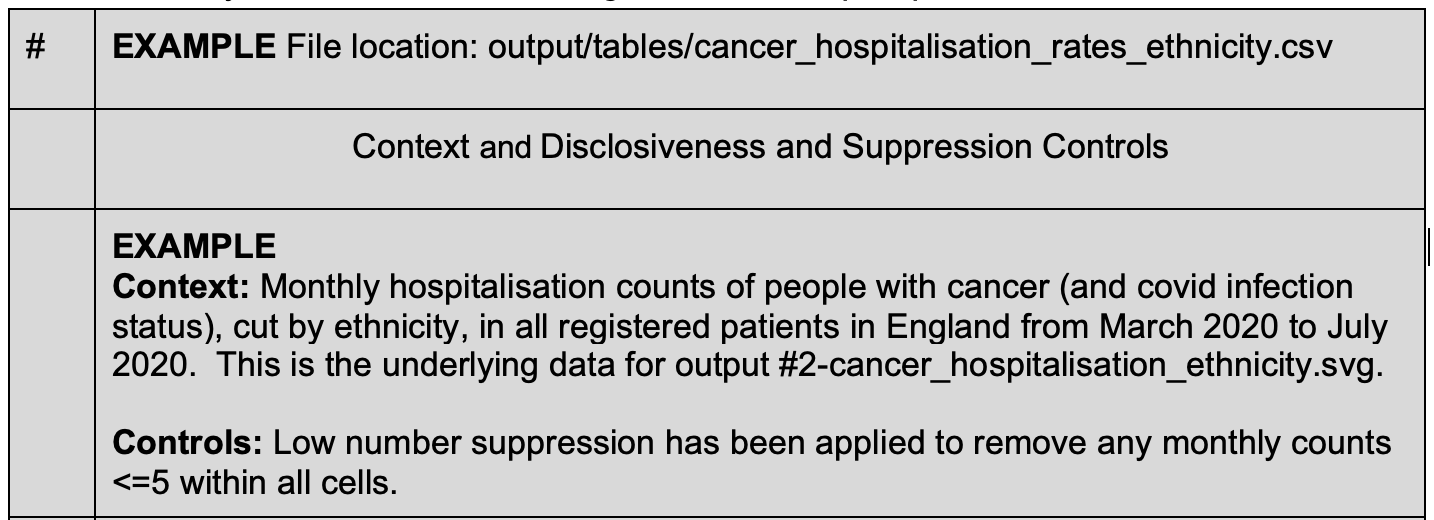
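To make "applying disclosure controls" a little more concrete, here is a minimal Python sketch of two common controls: suppressing small counts and rounding what remains. The function names, the threshold of 7 and the rounding base of 5 are illustrative assumptions for this example, not values or code taken from this post; the controls that apply to a real study are set by the platform's own guidance.

```python
from typing import Dict, Optional

# Illustrative values only (assumptions for this sketch).
SUPPRESSION_THRESHOLD = 7  # counts at or below this are redacted
ROUNDING_BASE = 5          # remaining counts are rounded to a multiple of this


def apply_disclosure_controls(
    count: int,
    threshold: int = SUPPRESSION_THRESHOLD,
    base: int = ROUNDING_BASE,
) -> Optional[int]:
    """Return None for a redacted small count, otherwise the rounded count.

    In this sketch, zero is treated like any other small count.
    """
    if count < 0:
        raise ValueError("counts cannot be negative")
    if count <= threshold:
        return None
    # Round to the nearest multiple of `base` using integer arithmetic.
    return base * ((count + base // 2) // base)


def redact_table(counts: Dict[str, int]) -> Dict[str, Optional[int]]:
    """Apply the controls to every cell of a simple one-way table."""
    return {group: apply_disclosure_controls(n) for group, n in counts.items()}
```

A researcher might run something like `redact_table({"18-39": 3, "40-64": 23})` on a table before requesting its release, then record in the review form which controls were applied.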

Once they are satisfied that files are safe for release, they can fill in a review request form. This form reiterates what is expected of the researcher: minimising the outputs they request for release, applying relevant disclosure controls and confirming that the requested files adhere to the permitted study results policy. For each file requested, the researcher is expected to provide a description of what the file shows, as well as any disclosure controls they have applied to the outputs, which allows for principles-based disclosure control. An example can be seen below.

2. Output review request is created

Incoming review requests are tracked using GitHub issues, which are widely used by development teams for project management. This was a pragmatic choice that was made for the following reasons:

- They are already used widely by the OpenSAFELY team for both core platform development and OpenSAFELY research.

- They provide out-of-the-box comment functionality that allows easy communication between reviewers.

- They allow output checkers to be assigned to open issues and notify those assigned reviewers of updates.

- Issue templates can be created to allow a consistent recording process across reviewers.

- They make it possible to track various metrics such as the time to review (see our next blog).

When a review is requested, a new GitHub issue is created, the review form is uploaded and two reviewers are assigned to it.
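As a rough illustration of this step, the Python sketch below turns a review request into the kind of JSON payload that GitHub's REST API accepts when creating an issue (`title`, `body` and `assignees` are real fields of that endpoint). Everything else is hypothetical: the `RequestedFile` and `ReviewRequest` classes, the issue title format and the checklist layout are assumptions made for this example, not a description of how the OpenSAFELY service is implemented.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class RequestedFile:
    """One file in a review request (hypothetical structure)."""
    path: str
    description: str
    disclosure_controls: str


@dataclass
class ReviewRequest:
    """A researcher's request to release files (hypothetical structure)."""
    workspace: str
    requester: str
    files: List[RequestedFile] = field(default_factory=list)


def build_issue_payload(request: ReviewRequest, reviewers: List[str]) -> Dict[str, object]:
    """Build a payload suitable for POST /repos/{owner}/{repo}/issues."""
    if len(set(reviewers)) != 2:
        raise ValueError("exactly two distinct reviewers are required")
    lines = [f"Review request from {request.requester}", ""]
    for requested in request.files:
        # One checklist item per file, with the researcher's own notes.
        lines += [
            f"- [ ] `{requested.path}`",
            f"  - Description: {requested.description}",
            f"  - Disclosure controls: {requested.disclosure_controls}",
        ]
    return {
        "title": f"Output review: {request.workspace}",
        "body": "\n".join(lines),
        "assignees": list(reviewers),
    }
```

The payload is only built here, not sent; posting it would need an authenticated HTTP request, which is left out to keep the sketch self-contained.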

3. Review of requested outputs

Output checkers, who also have access to results in the secure server, then undertake an independent review of the outputs. For each file they review, they are asked to mark it as approved, approved subject to change or rejected, and to provide commentary on their decision. These decisions are explained below.

- Approve: the output meets disclosure requirements and is safe to be released.

- Approve subject to change: the output is an acceptable type for release, but has outstanding disclosure issues that must be addressed before release.

- Reject: the output is not an acceptable type for release. An example is the release of practice-level data, which does not meet the permitted study results policy.
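The three decisions above map naturally onto a small enumeration. The Python sketch below also shows one possible way to combine the two reviewers' independent decisions for each file. The combination rule used here (the more cautious decision stands) is purely an assumption for illustration; this post does not say how differing decisions are reconciled, and the names `Decision`, `combine` and `summarise` are hypothetical.

```python
from enum import Enum
from typing import Dict, List


class Decision(Enum):
    APPROVE = "approve"
    APPROVE_SUBJECT_TO_CHANGE = "approve subject to change"
    REJECT = "reject"


# Ordered from least to most cautious, so the stricter decision can be picked.
_CAUTION = {
    Decision.APPROVE: 0,
    Decision.APPROVE_SUBJECT_TO_CHANGE: 1,
    Decision.REJECT: 2,
}


def combine(decisions: List[Decision]) -> Decision:
    """Assumed rule: the most cautious decision among the reviewers stands."""
    if not decisions:
        raise ValueError("at least one decision is required")
    return max(decisions, key=lambda decision: _CAUTION[decision])


def summarise(reviews: Dict[str, List[Decision]]) -> Dict[Decision, List[str]]:
    """Group file paths by their combined decision."""
    outcome: Dict[Decision, List[str]] = {decision: [] for decision in Decision}
    for path, decisions in reviews.items():
        outcome[combine(decisions)].append(path)
    return outcome
```

Under this rule, only files listed under `Decision.APPROVE` would be candidates for release; files approved subject to change would go back to the researcher.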

4. Review response and release of files

Once reviewed, the completed review request is sent back to the researcher and approved outputs are released from the secure server to the OpenSAFELY jobs site by a trained output checker. From here, researchers assigned to a particular project can view and download the released outputs. If one or more outputs are approved subject to change, researchers are asked to address the disclosure issues and submit a new review form, detailing any changes they make. We aim to respond to all review requests within 7 days, but often complete them much sooner (see our next blog for more detailed statistics on this).
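The 7-day target is simple to check programmatically. The Python sketch below assumes that the time a request was made and the time a response was sent are both recorded as timestamps; the function name and the idea of counting an exactly 7-day response as on target are assumptions for this example.

```python
from datetime import datetime, timedelta

# The response target stated above.
TARGET = timedelta(days=7)


def met_target(
    requested_at: datetime,
    responded_at: datetime,
    target: timedelta = TARGET,
) -> bool:
    """Return True if the response arrived within the target window."""
    if responded_at < requested_at:
        raise ValueError("a response cannot precede its request")
    return responded_at - requested_at <= target
```

Applied across all completed requests, a check like this gives the proportion of reviews that met the target.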

Summary

This blog has explained who the OpenSAFELY output checking team is and given some insight into how the output checking service is run. In the next blog, we will reflect on our experience running this service and look at some metrics related to the service, including the number of review requests we have completed, the average time taken to complete review requests and the time our reviewers spend on reviewing outputs.